Concurrency Control in Transaction Management: Ensuring Data Integrity

Concurrency control in transaction management is essential to maintain the integrity and accuracy of data in a multi-user environment where multiple transactions may run simultaneously. It ensures that transactions are executed in a controlled manner, avoiding potential issues such as lost updates, dirty reads, and inconsistent retrievals. In this blog, we’ll delve into the problems caused by uncontrolled concurrent transactions and the protocols used to prevent these issues.

Problems of Concurrency Control

When transactions are executed concurrently without control mechanisms, several problems can arise. The three most common issues include:

1. Lost Update Problem

The lost update problem occurs when two transactions, T1 and T2, both access the same data item, update it, and overwrite each other’s changes. This results in one transaction’s update being lost.

Example:

Consider two transactions, X and Y, both updating the balance of a bank account.

- At time t1, Transaction X reads the account balance.

- At time t2, Transaction Y reads the same balance.

- At time t3, Transaction X updates the balance based on its earlier read.

- At time t4, Transaction Y updates the balance, unaware of X's earlier change.

The update made by Transaction X is lost because Y overwrites it.

2. Dirty Read Problem

The dirty read problem occurs when one transaction reads data that has been modified by another transaction that has not yet committed. If the second transaction fails or rolls back, the first transaction ends up using uncommitted, potentially incorrect data.

Example:

- At time t1, Transaction Y updates a record in the database.

- At time t2, Transaction X reads the updated record.

- At time t3, Transaction Y aborts and rolls back its update.

Now, Transaction X holds a value that is not part of the stable, committed database.

3. Inconsistent Retrievals (Unrepeatable Read)

Inconsistent retrievals happen when a transaction reads a set of data and another transaction modifies the same data before the first transaction completes. This leads to inconsistent results when the data is read again.

Example:

Suppose two transactions, X and Y, are processing balances from several accounts.

- Transaction X starts summing account balances.

- Meanwhile, Transaction Y updates some of those balances.

- When Transaction X reads the balances again, they reflect the new values, leading to an inconsistent sum.

Transaction X may now have an incorrect total due to the changes made by Transaction Y.

Concurrency Control Protocols

Concurrency control protocols ensure that transactions maintain atomicity, isolation, and serializability, which are crucial for the correctness of concurrent transactions. These protocols can be categorized into lock-based and timestamp-based protocols.

1. Lock-Based Protocols

Lock-based protocols regulate access to data by ensuring that transactions obtain locks before performing read or write operations. Locks can be shared (read-only) or exclusive (read and write).

- Shared Lock (S): Multiple transactions can hold shared locks on the same data item, but none can modify the data.

- Exclusive Lock (X): Only one transaction can hold an exclusive lock on a data item at a time, and it can both read and write the data.

Lock Protocol Types:

-

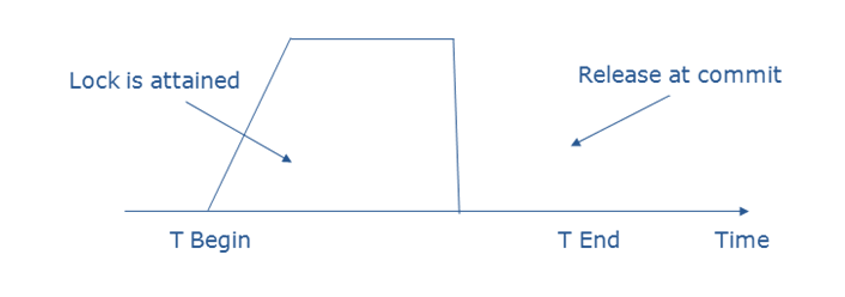

Simplistic Lock Protocol: Transactions request a lock before accessing data and release it upon completing the transaction. This is the simplest form of concurrency control.

-

Pre-Claiming Lock Protocol: Before starting, the transaction requests locks on all data items it will access. If all locks are granted, the transaction proceeds; otherwise, it waits.

-

Two-Phase Locking (2PL): This protocol ensures serializability by dividing the transaction's execution into two phases:

- Growing Phase: Locks are acquired but not released.

- Shrinking Phase: Locks are released, but no new locks are acquired.

Example:

In a banking system, Transaction T1 acquires locks on accounts A and B in the growing phase, updates their balances, and then releases the locks in the shrinking phase, ensuring no other transaction can access these accounts simultaneously

2. Timestamp-Based Protocols

Timestamp-based protocols ensure serializability by assigning a unique timestamp to each transaction, based on when the transaction starts. This timestamp is used to order transactions.

Basic Timestamp Ordering Protocol:

-

Read Operation: When a transaction Ti issues a read on data item X, the system checks if any newer transaction has written to X. If so, Ti is rolled back. Otherwise, the read is allowed, and the read timestamp of X is updated.

-

Write Operation: When a transaction Ti issues a write on data item X, the system checks the read and write timestamps of X. If any conflict is detected with a more recent transaction, Ti is rolled back; otherwise, the write is allowed.

Example:

- Transaction T1 (TS=100) attempts to read a data item A.

- Transaction T2 (TS=200) reads a data item B.

- Transaction T1 writes to C.

- Transaction T3 (TS=300) tries to read B, but since it has a more recent timestamp, the operation is allowed.

- T2 tries to write B but fails, as it conflicts with a read made by T3.

Thomas' Write Rule

Thomas' Write Rule is an optimization of the basic timestamp ordering protocol. It states that if a transaction Ti's write timestamp is older than the last write on a data item X, Ti’s write operation is skipped, as it would be irrelevant.

Advantages and Disadvantages of Timestamp-Based Protocols

- Advantages: Ensures serializability, avoids deadlocks, and transactions do not wait.

- Disadvantages: Can result in frequent rollbacks and may not ensure a recoverable schedule.

Conclusion

Concurrency control is vital for ensuring data consistency and integrity when multiple transactions occur simultaneously in a database system. By implementing lock-based or timestamp-based protocols, systems can mitigate the risks of lost updates, dirty reads, and inconsistent retrievals, ensuring that the database remains in a valid state even under heavy transaction loads.